Floating point math is, in general, pretty excellent. It usually has hardware support, it can represent both very large and very small numbers, and it carries a fixed number of binary significant figures. It is generally a suitable stand-in for a true "real" number in programming. But it does have some flaws. Because its precision is relative, its absolute precision is much finer near zero, so if the numbers we want to represent have a small fixed range (say 0 to 1), values near the 0 end will typically have less absolute error than values at the other end of the range. Enter fixed point math.
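To make the uneven spacing concrete, here is a small sketch that measures the gap between a float and the next representable float above it. The helper names spacing_above and spacing_demo are my own, and the exact gaps quoted below assume the usual 32-bit IEEE 754 float.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Gap between x and the next representable float above it.
float spacing_above(float x) {
    return std::nextafter(x, std::numeric_limits<float>::infinity()) - x;
}

// Illustrative check: floats are packed more tightly near 0 than near 1.
void spacing_demo() {
    assert(spacing_above(0.001f) < spacing_above(0.75f));
}
```

With IEEE 754 floats, the gap at 0.001 is 2^-33 while the gap at 0.75 is 2^-24, so the grid is 512 times coarser at the top of the 0 to 1 range than it is near the bottom.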

Floating point is nicely analogous to binary scientific notation with fixed field widths. Fixed point takes a different approach: we simply pick a fraction to use as the smallest division, and each number is just an integer multiple of that. Typically the fraction will be some power of 2, to match our binary numbers nicely. This in essence fixes the location of the radix point (decimal point in base 10, binary/bicimal point in base 2). Using this formulation, values in a fixed range, especially one starting at 0 or symmetric around 0, can be represented with uniform precision throughout the range.
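As a minimal sketch of that idea, assume an unsigned format with 16 fractional bits, so the stored integer n stands for n / 65536 and the range is 0 up to (but not including) 1. All of the names here (kFracBits, to_fixed, to_double, fixed_mul) are made up for illustration and do not come from any particular library.

```cpp
#include <cstdint>

// Assumed format: 16 fractional bits, stored integer n means n / 65536.
constexpr int kFracBits = 16;
constexpr std::uint32_t kOne = 1u << kFracBits;  // 65536 steps per unit

// Round to the nearest step. The caller must keep x in [0, 1) and far enough
// below 1 that it does not round up to 65536, which would not fit in 16 bits.
constexpr std::uint16_t to_fixed(double x) {
    return static_cast<std::uint16_t>(x * kOne + 0.5);
}

constexpr double to_double(std::uint16_t n) {
    return static_cast<double>(n) / kOne;
}

// Addition is plain integer addition. A product of two such values carries
// 32 fractional bits, so it is shifted back down by 16 (truncating).
constexpr std::uint16_t fixed_mul(std::uint16_t a, std::uint16_t b) {
    return static_cast<std::uint16_t>(
        (static_cast<std::uint32_t>(a) * b) >> kFracBits);
}

static_assert(to_fixed(0.5) == 32768, "0.5 is half of 65536 steps");
static_assert(fixed_mul(to_fixed(0.5), to_fixed(0.25)) == to_fixed(0.125),
              "0.5 * 0.25 == 0.125");
```

Every representable value sits exactly 1/65536 from its neighbours, whether it is near 0 or near 1.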

So, why isn't it more common? Well, it actually is used in practice with some frequency, but typically disguised as units. Consider a sleep function that takes a time in milliseconds, such as the Windows Sleep call. This is just a particular fixed-point representation of a time in seconds, with a fixed 1/1000 as the spacing. Another common example is storing prices in cents: there is no smaller unit of a dollar than a cent (I suppose, though gas prices seem to indicate otherwise), so an integer number of cents represents any price exactly, up to the largest value the integer can hold.
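The cents case can be sketched in a couple of lines. The Cents alias and the price helper are hypothetical names of my own, and the only point is that sums and integer multiples of prices stay exact.

```cpp
#include <cstdint>

// Hypothetical alias: a price stored as a whole number of cents,
// i.e. fixed point with a spacing of 1/100 of a dollar.
using Cents = std::int64_t;

constexpr Cents price(std::int64_t dollars, std::int64_t cents) {
    return dollars * 100 + cents;
}

// Exact by construction, with no rounding to worry about.
static_assert(price(0, 10) + price(0, 20) == price(0, 30), "10c + 20c == 30c");
static_assert(price(19, 99) * 3 == price(59, 97), "3 x $19.99 == $59.97");
```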

But why isn't it used explicitly more often? Principally because fast floating point hardware is available, and floating point seems to have much better software support. So while you can write code that uses fixed point arithmetic, you get limited support from libraries, at least in C++.

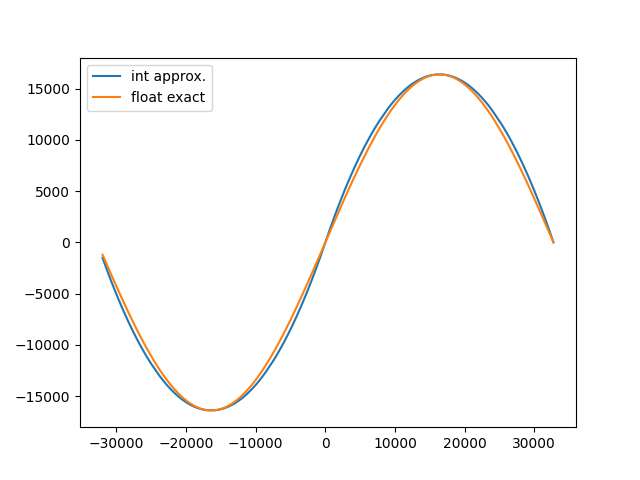

But because of the advantage of uniform precision, I'm interested in trying my hand at writing a fixed point library at some point, especially for numbers from 0 to 1 or -1 to 1. There are certainly some nice approximations that can be done with it. For example, a piecewise-parabolic approximation of sine can be done with one multiplication, two bit-shifts, and one subtraction, and gives fairly decent results.

    (angle<<1) - ((angle*abs(angle))>>14)
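Here is that expression wrapped in a function, as a sketch under conventions I am assuming rather than ones stated above: the angle is a signed 16-bit value where 65536 steps make a full turn (so 16384 is a quarter turn), and the result is scaled so that 16384 means 1.0. The name sin_parabolic is my own. I wrote the doubling as a multiply by 2, which compilers turn into a shift, because left-shifting a negative signed value was undefined behavior before C++20.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>

// Assumed input:  signed 16-bit angle, 65536 steps per full turn.
// Assumed output: 16384 represents 1.0.
std::int32_t sin_parabolic(std::int16_t angle) {
    const std::int32_t a = angle;  // widen so a * |a| cannot overflow
    // The right shift of a negative value is an arithmetic shift on
    // mainstream compilers (and is guaranteed to be one since C++20).
    return (a * 2) - ((a * std::abs(a)) >> 14);
}

// Illustrative check of the easy points on the curve.
void sin_demo() {
    assert(sin_parabolic(0) == 0);           // sin(0) = 0
    assert(sin_parabolic(16384) == 16384);   // quarter turn  -> 1.0
    assert(sin_parabolic(-16384) == -16384); // minus quarter -> -1.0
}
```

At an eighth of a turn (angle 8192) this gives 12288, or 0.75, against a true value of about 0.7071, which gives a feel for the size of the error.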

As for uses, I think my main one might be representing the phase in the CharacterIK code. Then I don't have to litter the rest of the code with a bunch of floating point mod calls (granted, wrapped more accessibly in Urho3D::AbsMod). I can just rely on the integer wrapping around when it overflows (using an unsigned type, since signed overflow is undefined behavior in C++).
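A rough sketch of what I have in mind, assuming one full cycle is split into 65536 steps so that a 16-bit unsigned integer holds exactly one cycle. The Phase alias and both helpers are hypothetical, and none of this is taken from the CharacterIK code or from Urho3D.

```cpp
#include <cstdint>

// Hypothetical phase representation: 65536 steps per full cycle.
using Phase = std::uint16_t;

constexpr Phase advance(Phase phase, Phase delta) {
    // The sum is computed in a wider integer type; converting back to
    // 16 bits reduces it modulo 65536, which is exactly the wraparound
    // a floating point mod call would otherwise provide.
    return static_cast<Phase>(phase + delta);
}

// Phase as a fraction of a cycle in [0, 1), for code that still wants a float.
constexpr double as_cycle_fraction(Phase phase) {
    return phase / 65536.0;
}

static_assert(advance(49152, 32768) == 16384,
              "0.75 of a cycle plus 0.5 wraps to 0.25");
```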

Aside: In some ways the idea of using a fixed absolute precision rather than a fixed relative precision reminds me of the idea of intuitionist mathematics, which holds that there isn't infinite precision to numbers.